Modern process control algorithms are the key to the success of industrial automation. The increased efficiency and quality create value that benefits everyone from the producers to the consumers.

The question, then, is whether we can improve these control algorithms further.

From AlphaGo to robot-arm control, deep reinforcement learning (DRL) has tackled a variety of tasks that traditional control algorithms cannot solve. However, DRL needs either a large, densely sampled dataset or a lot of interaction with the environment to succeed.

In many cases, we need to verify and test a reinforcement learning controller in a simulator before putting it into production. However, few simulations of industrial-level production processes are publicly available.

To give back to the research community and encourage future work on applying DRL to process control problems, we built and published a simulation playground, with data, so that any interested researcher can experiment with it and benchmark their own controllers.

The simulators are all written in the easy-to-use OpenAI Gym format. Each simulation also comes with a corresponding data sampler, a pre-sampled d4rl-style dataset for training offline controllers, and a set of preconfigured online and offline deep learning algorithms.
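To make the Gym format and the d4rl-style dataset concrete, here is a minimal sketch. It does not use QuarticGym itself: `ToyTankEnv` and `sample_dataset` are hypothetical names invented for this illustration, and the environment follows the classic Gym convention in which `reset()` returns an observation and `step()` returns `(observation, reward, done, info)`. The dataset keys mirror the ones d4rl uses.

```python
import random


class ToyTankEnv:
    """Hypothetical stand-in for a Gym-style simulator (not part of QuarticGym)."""

    def __init__(self, target=1.0, max_steps=50):
        self.target = target
        self.max_steps = max_steps
        self.level = 0.0
        self.t = 0

    def reset(self):
        # Start every episode from an empty tank.
        self.level = 0.0
        self.t = 0
        return [self.level]

    def step(self, action):
        # Inflow raises the level; a fraction of the content drains each step.
        self.level += 0.1 * action - 0.05 * self.level
        self.t += 1
        reward = -abs(self.target - self.level)
        done = self.t >= self.max_steps  # episodes end only by time limit
        return [self.level], reward, done, {}


def sample_dataset(env, policy, episodes=3):
    """Roll out a policy and store the transitions in a d4rl-style dictionary."""
    keys = ("observations", "actions", "rewards",
            "next_observations", "terminals", "timeouts")
    data = {key: [] for key in keys}
    for _ in range(episodes):
        obs = env.reset()
        done = False
        while not done:
            action = policy(obs)
            next_obs, reward, done, _ = env.step(action)
            data["observations"].append(obs)
            data["actions"].append(action)
            data["rewards"].append(reward)
            data["next_observations"].append(next_obs)
            data["terminals"].append(False)  # no true terminal state in this toy
            data["timeouts"].append(done)    # time-limit endings are timeouts
            obs = next_obs
    return data


rng = random.Random(0)
dataset = sample_dataset(ToyTankEnv(), lambda obs: rng.uniform(0.0, 1.0))
print(len(dataset["observations"]), "transitions collected")
```

An offline algorithm would train on such a dictionary directly, while an online algorithm would call `reset()` and `step()` on the environment itself.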

For now, four simulators are ready to use: BeerFMTEnv, PenSimEnv, AtropineEnv, and ReactorEnv. Below are some details on each of them.

BeerFMTEnv

The BeerFMTEnv provides a typical simulation of the industry-level beer fermentation process. The goal is to reach the stop condition (finished production) within a certain time limit; the quicker, the better.

The only input that the simulation takes is the reaction temperature. To assist control algorithms, we also provide canonical production processes for this simulation, written as a set of named ‘profiles’. Details can be found here.
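As an illustration of how a temperature profile can drive a single-input simulation, the sketch below represents a profile as a piecewise-constant schedule. The data layout, the name `profile_temperature`, and all numbers are assumptions made up for this example; they are not the profiles shipped with BeerFMTEnv.

```python
from bisect import bisect_right

# Hypothetical profile: (start_hour, temperature_in_celsius) pairs, sorted by
# start time. The values are invented for illustration only.
EXAMPLE_PROFILE = [(0, 10.0), (40, 13.0), (100, 16.0), (140, 9.0)]


def profile_temperature(profile, hour):
    """Return the temperature setpoint that is active at the given hour."""
    starts = [start for start, _ in profile]
    # Index of the last segment whose start time is <= hour.
    idx = max(bisect_right(starts, hour) - 1, 0)
    return profile[idx][1]


for hour in (0, 39, 40, 120, 200):
    print(hour, profile_temperature(EXAMPLE_PROFILE, hour))
```

A controller can be benchmarked against such a schedule by feeding the profile's temperature to the simulator at each step and comparing how quickly each approach finishes production.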

PenSimEnv

The PenSimEnv aims to simulate the penicillin production process. The simulation itself is based on PenSimPy, and this blog has the best description of it.

AtropineEnv

The AtropineEnv simulates the atropine production process. We also provide it with a Model Predictive Controller (MPC) and an Economic Model Predictive Controller (EMPC) for benchmarking purposes. A much more detailed description can be found in this blog.

ReactorEnv

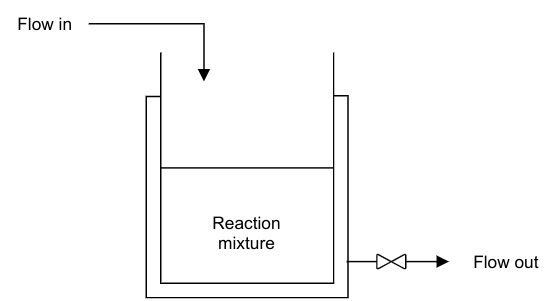

The ReactorEnv serves as a general Continuous Stirred Tank Reactor (CSTR) Process Model, which provides the necessary conditions for a chemical reaction to take place.

The ‘continuous’ here means that reactants are continuously fed into the reactor while products are continuously withdrawn from it. The environment also comes with a prebuilt MPC and a Proportional–Integral–Derivative (PID) controller.
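For readers unfamiliar with PID control, here is a minimal textbook sketch. It is not the controller bundled with ReactorEnv: the `PID` class, the `simulate` helper, the gains, and the first-order toy process are all assumptions chosen for illustration, not a CSTR model.

```python
class PID:
    """Textbook discrete PID controller (illustrative, not QuarticGym's)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        self.integral += error * self.dt
        # No derivative term on the first call, when there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid, setpoint=1.0, steps=500, dt=0.1, tau=2.0):
    """Drive a toy first-order process dy/dt = (u - y) / tau with the PID."""
    y = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, y)
        y += dt * (u - y) / tau  # explicit Euler step
    return y


final_output = simulate(PID(kp=2.0, ki=1.0, kd=0.0, dt=0.1))
print(f"process output after 50 s: {final_output:.4f}")
```

The integral term is what removes the steady-state offset here: with proportional action alone, this toy process would settle below the setpoint.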

We believe that QuarticGym provides a testbed that can accelerate research on advanced AI-based algorithms, such as deep reinforcement learning, for industrial control. We will keep working on this project to bridge the gap between deep-learning-based control research and productization.

More simulations will be added in the near future, and everyone is welcome to use the project or contribute to it!