In regulated, high-variability manufacturing environments such as pharma, chemicals, and food production, traditional Statistical Process Control (SPC) tools are no longer enough on their own. Control charts for single variables are still useful, but they can’t capture the complexity of modern batch processes in which dozens or hundreds of parameters interact over time.

Multivariate Statistical Process Control (MSPC) solves this problem by looking at process variables together, not in isolation. Instead of checking if one probe is drifting, MSPC checks whether the entire system is behaving normally, accounting for variation, correlation, and time evolution. It enables faster root cause analysis, predictive monitoring, and greater confidence in batch quality—whether the data comes from a fermenter, reactor, granulator, or beyond.

At its core, MSPC applies multivariate techniques—like PCA (Principal Component Analysis) and PLS (Partial Least Squares)—to batch process data. These techniques reduce high-dimensional datasets to just a few interpretable scores, capturing the dominant trends and variations across a process.
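To make the idea of "a few interpretable scores" concrete, here is a minimal sketch of PCA on three correlated probes. It uses only the Python standard library and finds the first principal component by power iteration. The probe names, the simulated data, and the helper names `autoscale` and `first_component` are illustrative assumptions. A production MSPC tool would typically rely on a numerical library and a more robust algorithm.

```python
import math
import random

def autoscale(rows):
    """Mean-center each column and scale it to unit variance."""
    n, cols = len(rows), list(zip(*rows))
    means = [sum(c) / n for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / (n - 1))
            for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]

def first_component(X, iterations=200):
    """Approximate the first PCA loading vector by power iteration on X^T X."""
    k = len(X[0])
    p = [1.0 / math.sqrt(k)] * k                      # uniform starting guess
    for _ in range(iterations):
        t = [sum(x * w for x, w in zip(row, p)) for row in X]           # scores t = X p
        p = [sum(ti * row[j] for ti, row in zip(t, X)) for j in range(k)]  # X^T t
        norm = math.sqrt(sum(w * w for w in p))
        p = [w / norm for w in p]
    return p

# Simulated data (assumption): three probes driven by one hidden process state.
rng = random.Random(0)
rows = []
for _ in range(200):
    latent = rng.gauss(0, 1)
    rows.append([
        37.0 + 0.5 * latent + rng.gauss(0, 0.05),     # temperature
        40.0 + 4.0 * latent + rng.gauss(0, 0.4),      # dissolved oxygen
        300.0 + 20.0 * latent + rng.gauss(0, 2.0),    # agitator speed
    ])

X = autoscale(rows)
p = first_component(X)
scores = [sum(x * w for x, w in zip(row, p)) for row in X]

# Share of the total variance carried by the single score.
explained = sum(t * t for t in scores) / sum(v * v for row in X for v in row)
print(f"loadings: {[round(w, 3) for w in p]}")
print(f"variance explained by one score: {explained:.1%}")
```

Because the three simulated probes move together, one score carries almost all of the variation, which is the compression the paragraph above describes.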

Why this matters:

To manage the complexity of batch processes, MSPC typically uses two modeling perspectives:

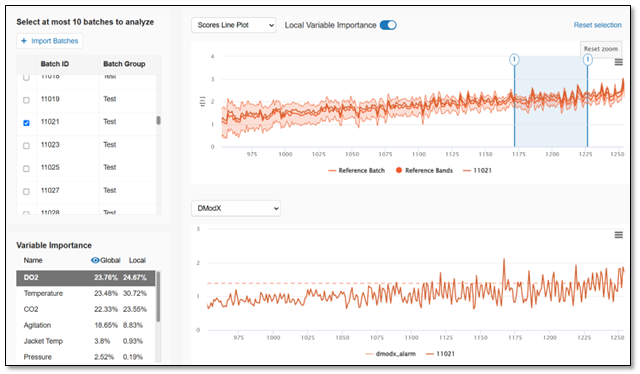

Batch Evolution Models (BEMs) monitor how variables evolve within a batch over time, phase by phase. BEMs are ideal for real-time tracking of batch performance, comparing each time point to the expected trajectory learned from past good batches. Operators and engineers get alerts if a batch starts to deviate mid-run, allowing for intervention before quality is impacted.

Figure 1: Batch evolution model with DModX (distance to the model in X) and feature contribution scores
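The "expected trajectory learned from past good batches" can be sketched as time-dependent control limits. The example below is a simplified illustration, not a full BEM: it tracks a single signal (in practice this would often be a model score or a DModX value rather than a raw variable), assumes the batches are already aligned to the same time points, and uses made-up numbers. The helper names `trajectory_limits` and `first_deviation` are hypothetical.

```python
import statistics

def trajectory_limits(good_batches, k=3.0):
    """Per-time-point (low, high) limits at mean +/- k standard deviations."""
    limits = []
    for values in zip(*good_batches):       # one time point across all batches
        mean = statistics.mean(values)
        sd = statistics.stdev(values)
        limits.append((mean - k * sd, mean + k * sd))
    return limits

def first_deviation(batch, limits):
    """Index of the first time point outside its limits, or None if all are inside."""
    for i, (value, (low, high)) in enumerate(zip(batch, limits)):
        if not low <= value <= high:
            return i
    return None

# Illustrative reference data (assumption): one signal at six aligned time points.
good_batches = [
    [0.10, 0.20, 0.41, 0.80, 1.58, 2.30],
    [0.11, 0.22, 0.39, 0.83, 1.62, 2.41],
    [0.09, 0.19, 0.40, 0.78, 1.55, 2.35],
    [0.10, 0.21, 0.42, 0.81, 1.60, 2.38],
]
limits = trajectory_limits(good_batches)

# A running batch whose growth stalls after the third sample.
running = [0.10, 0.20, 0.40, 0.55, 0.90, 1.20]
alarm_at = first_deviation(running, limits)
print(f"first out-of-limit time point: {alarm_at}")
```

The limits widen and narrow along the trajectory, so the stalled batch is flagged at the point where it leaves the expected path rather than when it crosses a single static threshold.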

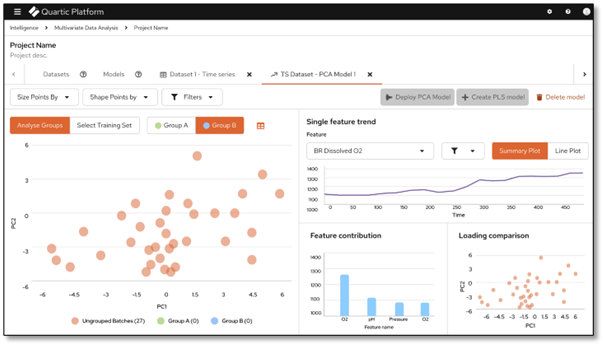

Batch Level Models (BLMs) evaluate entire batches based on their final state (e.g., yield, potency, impurity levels). BLMs are useful for offline analysis, such as investigating historical variability or correlating input conditions with output quality. They can also predict the likely outcome of in-progress batches using partial data.

Figure 2: Batch level model using principal component analysis
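One common way to prepare data for a batch-level view is batch-wise unfolding: each batch, recorded as time points by variables, is flattened into a single row so that every batch becomes one observation. The sketch below assumes batches are already aligned to the same number of time points. The function names, variable names, and values are illustrative, and the article does not state which unfolding scheme a given tool uses.

```python
def unfold(batch):
    """Flatten one batch (time points x variables) into a single row."""
    return [value for time_point in batch for value in time_point]

def unfold_all(batches):
    """Unfold every batch and check that all rows have the same length."""
    rows = [unfold(b) for b in batches]
    if len({len(r) for r in rows}) != 1:
        raise ValueError("batches must be aligned to the same time points")
    return rows

def column_names(variables, n_time_points):
    """Label unfolded columns as '<variable>@t<index>' in the same order as unfold()."""
    return [f"{v}@t{t}" for t in range(n_time_points) for v in variables]

# Two illustrative batches (assumption): 3 time points x 2 variables each.
batch_a = [[37.0, 40.0], [37.1, 38.5], [37.0, 36.0]]
batch_b = [[36.9, 41.0], [37.2, 39.0], [37.1, 35.5]]

rows = unfold_all([batch_a, batch_b])
names = column_names(["temp", "DO"], n_time_points=3)

for name, a, b in zip(names, rows[0], rows[1]):
    print(f"{name:>8}: {a:6.1f} {b:6.1f}")
```

The resulting matrix has one row per batch, so PCA or PLS can then relate whole-batch behavior to outcomes such as yield or potency.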

By combining BEM and BLM, manufacturers can achieve both early warnings during the batch and predictive insights at batch completion—enabling smarter decisions across the production lifecycle.

Traditionally, batch data analysis happened offline—weeks after the batch finished—by specialists using tools like SIMCA or Minitab. While valuable for continuous improvement and root cause analysis, these methods were disconnected from real-time operations.

Modern MSPC tools break down that barrier.

Today, with cloud-native or hybrid architectures, MSPC models can be developed offline and then deployed online for continuous monitoring. The same PCA or PLS models used for investigations can power live dashboards, trigger alerts, and even feed advanced control systems.

Benefits of this unified workflow include:

Practical Example: Monitoring a Biopharma Fermentation

Imagine a fermentation process with 60 tracked variables, including temperature, dissolved oxygen (DO), agitator speed, base addition, and optical density, over a 5-day batch. Historically, deviations were only spotted after QA release, sometimes days later.

With a modern MSPC tool:

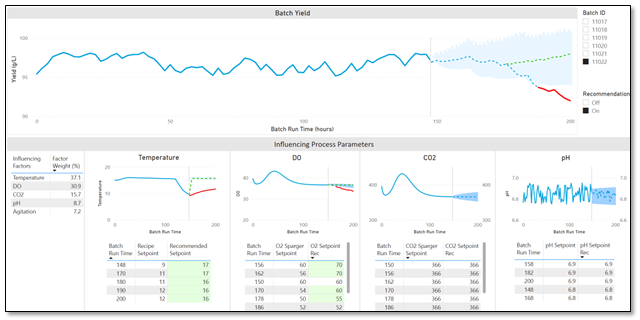

At the same time, a Batch Level Model predicts final yield and purity, comparing the in-progress batch against historical outcomes. Over time, these insights feed into process optimization and reduce cycle variability.

Figure 3: Biopharmaceutical BEM model with yield predictions

While SPC still has a role, MSPC brings powerful advantages for modern batch manufacturing:

| Traditional SPC | MSPC |
| --- | --- |
| Univariate | Multivariate |
| Static limits | Time-dependent limits |
| Works on single parameters | Captures complex interactions |
| Limited diagnostics | Advanced visualization (scores, contribution, loadings) |
| Hard to scale | Easily scalable and automated |
| Post-run QA focus | Real-time detection and early warning |

And when MSPC tools are integrated with existing data infrastructure—MES, historians, PAT tools—they become a central nervous system for process intelligence.
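The contrast between "works on single parameters" and "captures complex interactions" can be illustrated with a small sketch. Two simulated variables normally move together. A point that breaks that relationship stays inside each variable's own 3-sigma limits, yet stands out clearly in a Hotelling T² statistic. The data, the helper names, and the use of a chi-square limit (a large-sample approximation, where an F-based limit is more usual for small reference sets) are all assumptions made for illustration.

```python
import math
import random
import statistics

def fit_reference(xs, ys):
    """Means, variances, and covariance of two variables from reference data."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sxx, syy = statistics.variance(xs), statistics.variance(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)
    return mx, my, sxx, syy, sxy

def t_squared(point, ref):
    """Hotelling T^2 for one 2-D point: d^T S^-1 d with an explicit 2x2 inverse."""
    mx, my, sxx, syy, sxy = ref
    dx, dy = point[0] - mx, point[1] - my
    det = sxx * syy - sxy ** 2
    return (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det

def within_univariate_limits(point, ref, k=3.0):
    """True if each variable is inside its own mean +/- k*sd limits."""
    mx, my, sxx, syy, _ = ref
    return (abs(point[0] - mx) <= k * math.sqrt(sxx)
            and abs(point[1] - my) <= k * math.sqrt(syy))

# Simulated reference data (assumption): y tracks x closely.
rng = random.Random(1)
xs = [rng.gauss(0, 1) for _ in range(500)]
ys = [x + rng.gauss(0, 0.2) for x in xs]
ref = fit_reference(xs, ys)

# 99% limit for 2 variables: chi-square with 2 degrees of freedom is -2*ln(alpha).
limit = -2 * math.log(0.01)

consistent = (1.5, 1.5)     # both high together: follows the usual relationship
broken = (1.5, -1.5)        # each value is ordinary, the combination is not

for name, pt in [("consistent", consistent), ("broken", broken)]:
    print(f"{name}: univariate ok={within_univariate_limits(pt, ref)}, "
          f"T2={t_squared(pt, ref):.1f}, limit={limit:.2f}")
```

Both points pass the single-variable checks, but only the multivariate statistic reveals that the second one violates the correlation structure of normal operation.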

MSPC isn’t just a statistical upgrade to SPC—it’s a different way of thinking. It treats process data as a system of interrelated behaviors, not isolated control points. When deployed with modern tools that support offline development and online monitoring in a unified workflow, MSPC empowers process teams to move from reactive firefighting to proactive decision-making.

Whether you’re in life sciences, chemicals, or advanced materials, adopting modern MSPC means:

The complexity of batch processes is only growing. MSPC is how modern manufacturers keep up—without drowning in data.